The weight of the automotive and tech industries is fully behind the move toward self-driving cars. Cars with “limited autonomy”—i.e., the ability to drive themselves under certain conditions (level 3) or within certain geofenced locations (level 4)—should be on our roads within the next five years.

But a completely autonomous vehicle—capable of driving anywhere, any time, with human input limited to telling it just a destination—remains a more distant goal. To make that happen, cars are going to need to know exactly where they are in the world with far greater precision than currently possible with technology like GPS. And that means new maps that are far more accurate than anything you could buy at the next gas station—not that a human would be able to read them anyway.

Fully aware of this need, car makers like BMW, Audi, Mercedes-Benz, and Ford have been voting with their wallets. They’re investing in companies like Here and Civil Maps that are building the platforms and gathering the data required. The end result will be a high-definition 3D map of our road networks—and everything within a few meters of them—that’s constantly updated by vehicles as they drive along.

HD Maps for these territories

Here started work building HD maps back in 2013, according to Sanjay Sood, the company’s VP for highly automated driving. “The notion of an HD map was created during a joint project with Here and Daimler for the ‘Bertha Benz’ drive, and we developed a core technology and HD map that was created as a research prototype in order to facilitate the functioning of that car driving through the German countryside,” he told Ars. “When it comes to automated driving, the map becomes another sensor that helps the automated vehicle make decisions.”

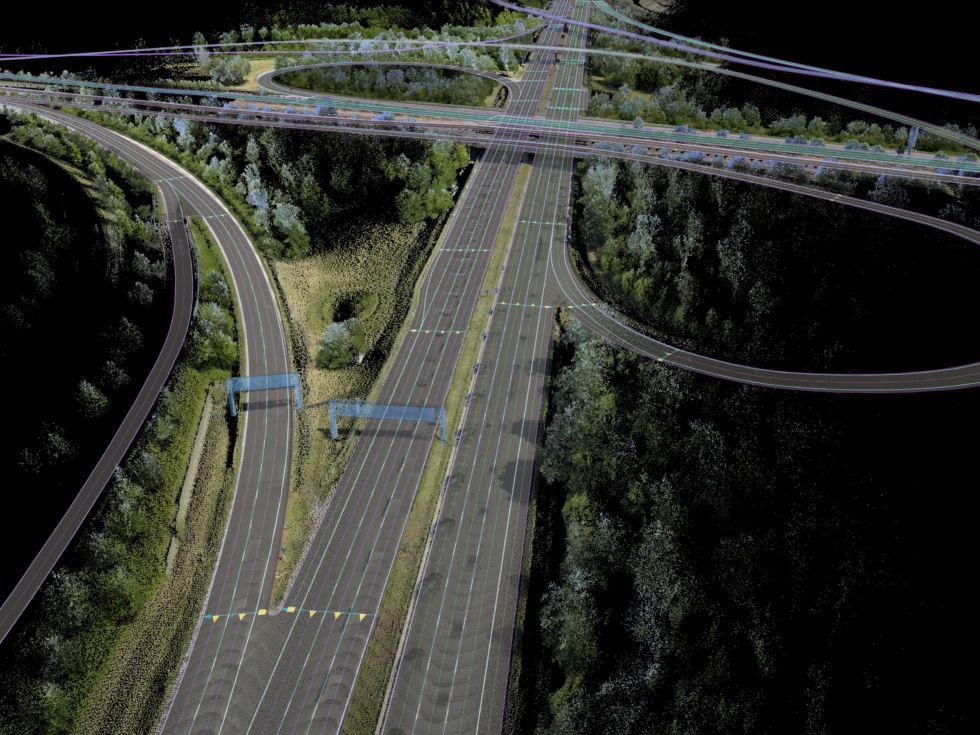

The first step is to create the initial map, which involves sensor-encrusted mapping vehicles that put Google’s Street View cars to shame. Here uses a fleet of vehicles equipped with a roof-mounted sensor mast that packs 96 megapixels’ worth of cameras, a 32-beam Velodyne LIDAR scanner, and highly accurate NovAtel GPS inertial measurement units. These mapping vehicles drive around creating a 3D scan of the road and its surroundings that gets sent to Here’s cloud. From the cloud, that data is incorporated into a centimeter-accurate digital recreation of the real world.

“Starting last year, we’re essentially building the road network in order to have this map available for the first fleets of cars that are going to be leveraging this technology that are going to be showing up on the roads around 2020,” said Sood. “So we have to seed that ecosystem with a map.”

Likewise, Civil Maps just announced its Atlas DevKit, a plug-and-play solution developed for its customers. “Advanced localization, map creation, and crowdsourcing of maps are key challenges facing those hoping to test and deploy autonomous vehicle technology,” said Sravan Puttagunta, co-founder and CEO of Civil Maps. “The Atlas DevKit platform accelerates the pace of innovation by enabling developers to quickly and economically localize vehicles, build dynamic maps, and crowdsource that critical information with other cars in real time.”

Like Here’s mapping vehicles, Civil Maps’ Atlas DevKit uses a roof-mounted mast (which can be configured with different sensors), although the company also offers a cheaper version that can use the optical, ultrasonic, and radar sensors already festooning many a new vehicle.

It looks like you’re driving: Do you want me to track changes?

Of course, “drawing” a map once won’t be sufficient for a technology to which humans are supposed to trust their lives. Rather, maps will need to be constantly updated to reflect closures, road works, and other major obstacles.

Here has a couple of approaches to solving that problem. “We have hundreds of these mapping vehicles deployed around the globe,” Sood explained. “We have very in-depth relationships with many cities and regional authorities. So typically when there’s a large construction project happening, we know well before it even starts, and in many situations we can drive our vehicles there before the roads are open to the public.” That will allow Here to deploy updated maps (which typically arrive as 2 km by 2 km tiles from Here’s cloud) to vehicles the day a certain road is opened or closed.
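To make the tile idea concrete, here is a minimal sketch of how a car might work out which 2 km tiles it needs. Only the tile size comes from Sood's description; everything else is an assumption for illustration, including the flat, meter-based coordinate frame and the hypothetical helper names `tile_index` and `tiles_around`. Here's actual tiling scheme is not described in this article.

```python
import math

TILE_SIZE_M = 2000.0  # 2 km tile edge, the size quoted above


def tile_index(x_m: float, y_m: float) -> tuple[int, int]:
    """Return the (column, row) of the tile containing a point.

    Assumes x_m and y_m are meters in a flat, projected frame
    (an illustrative simplification, not Here's real scheme).
    """
    return (math.floor(x_m / TILE_SIZE_M), math.floor(y_m / TILE_SIZE_M))


def tiles_around(x_m: float, y_m: float, radius: int = 1) -> list[tuple[int, int]]:
    """List the tile holding the point plus its neighbors out to `radius` tiles."""
    col, row = tile_index(x_m, y_m)
    return [
        (col + dc, row + dr)
        for dr in range(-radius, radius + 1)
        for dc in range(-radius, radius + 1)
    ]
```

A car at `(100.0, 100.0)` would sit in tile `(0, 0)` and, with the default radius, request a 3-by-3 block of nine tiles so that the map is already on board before the vehicle crosses a tile edge.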

But a more scalable solution involves leveraging the embedded sensors in cars already using HD maps to navigate. “Today, many high-end vehicles not only have LTE connections but an array of advanced sensors on them; a forward-looking camera, forward and rear radar, and we’re working with numerous OEMs on getting that sensor data transmitted into our cloud,” said Sood. That’s going to mean millions of data points being constantly uploaded to the cloud, where Here’s servers will do the heavy lifting of making sense out of it all.

“We have machine learning algorithms and aggregation algorithms that sift through that data and try distilling changes from noise or aberrations in the world. Once we’ve detected those changes we update the map and then deploy that to the vehicle,” explained Sood. As you might imagine, that has also meant coming up with a set of standards—called Sensoris—so that different makes of cars aren’t uploading wildly different kinds of data. After all, a BMW 7 Series might use a more expensive radar sensor than a Volkswagen Golf, mounted in a different spot, meaning each gets a slightly different view of the world.

“How do you take all this heterogeneous data and then make sense of it when you put it into a big data lake?” Sood asked. “That’s where a lot of the specialized skills that Here has come to the forefront.”
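As a rough illustration of separating real changes from noise, the sketch below swaps the machine learning Sood describes for simple voting: a change is accepted only when enough distinct vehicles report on the same map feature and most of them agree. The report format, the thresholds, and the function name `confirmed_changes` are all hypothetical. They are not part of Sensoris or of Here's pipeline.

```python
from collections import defaultdict


def confirmed_changes(reports, min_vehicles=3, min_agreement=0.8):
    """Return the set of feature IDs that enough vehicles agree have changed.

    `reports` is an iterable of (vehicle_id, feature_id, differs_from_map)
    tuples, an assumed format used only for this example.
    """
    votes = defaultdict(dict)
    for vehicle_id, feature_id, differs in reports:
        # Keep one vote per vehicle per feature; a later report overwrites an earlier one.
        votes[feature_id][vehicle_id] = differs

    confirmed = set()
    for feature_id, by_vehicle in votes.items():
        if len(by_vehicle) < min_vehicles:
            continue  # too few independent observers to trust
        share = sum(by_vehicle.values()) / len(by_vehicle)
        if share >= min_agreement:
            confirmed.add(feature_id)
    return confirmed
```

With three cars all reporting that a sign no longer matches the map, the sign is flagged. A single car reporting a stray traffic cone is treated as noise until other vehicles back it up.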

Not just graphics cards

You may only know it for its GPUs, but Nvidia is becoming quite the player in this field, too, thanks to the company’s expertise with machine learning and deep neural networks. Those have plenty of applications in the self-driving world, and Nvidia is working with Here (and recently bought a stake in the company), as well as with TomTom, Baidu, and Zenrin, on mapping and cloud-to-car platforms. “HD maps are essential for self-driving cars,” said Jen-Hsun Huang, founder and chief executive officer of Nvidia. “Here’s adoption of our deep learning technology for their cloud-to-car mapping system will accelerate automakers’ ability to deploy self-driving vehicles.”

Nvidia’s technology, along with Intel’s, will also be required to slim down the bandwidth bills that will surely follow a crowdsourced mapping fleet. “We’re developing a compute platform for the car, where the car itself does the change detection,” Sood said. “A lot of the change detection currently happens in the cloud. Once you have cars that actually have the map in the vehicle, that are running around the world, our goal is to have the cars themselves do the change detection. So what it sends up to the cloud isn’t a stream of sensor data but specifically, ‘here’s what’s changed in the environment’: the differences between what the car sees and what the map says.” That means optimizing all that deep learning and sensor fusion to work onboard the car rather than in a powerful server farm.
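The bandwidth saving comes from uploading a difference rather than raw sensor data. The sketch below shows that idea in its simplest form, under some generous assumptions: perception has already matched each observed object to a map feature ID, positions are 2D points in meters, and every mapped feature was within sensor range. The function name `map_delta` and the 0.2 m tolerance are invented for the example.

```python
import math


def map_delta(map_features, observed, tolerance_m=0.2):
    """Compare what the car sees with what the map says and return only the differences.

    Both arguments map a feature ID to an (x, y) position in meters.
    This is an illustrative data model, not Here's.
    """
    delta = {"added": {}, "moved": {}, "missing": []}
    for feature_id, position in observed.items():
        if feature_id not in map_features:
            delta["added"][feature_id] = position  # seen, but not on the map
        elif math.dist(position, map_features[feature_id]) > tolerance_m:
            delta["moved"][feature_id] = position  # on the map, but displaced
    # On the map, but not seen (assumes the feature was in sensor range).
    delta["missing"] = sorted(f for f in map_features if f not in observed)
    return delta
```

If nothing has changed, the delta is empty and the car has almost nothing to upload, which is the point of moving change detection out of the cloud.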

Listing image by Here